ONNX Runtime is an open source project that is designed to accelerate machine learning across a wide range of frameworks, operating systems, and hardware platforms. Today, we are excited to announce ONNX Runtime release v1.5 as part of our AI at Scale initiative. This release includes ONNX Runtime mobile, a new feature targeting smartphones and other small storage devices. This capability extends ONNX Runtime to support the optimized execution of Machine Learning (ML) models in edge scenarios. Edge includes any compute enabled devices such as PCs, smartphones, special-purpose embedded devices, or IoT devices.

ONNX Runtime is the inference engine used to execute ONNX models. ONNX Runtime is supported on different Operating System (OS) and hardware (HW) platforms. The Execution Provider (EP) interface in ONNX Runtime enables easy integration with different HW accelerators. There are published packages available for x86_64/amd64 and aarch64 or developers can build from source for any custom configuration. Today’s announcement enables developers to create an optimized package for the ONNX Runtime to be used across the diverse set of edge devices. This package supports the same APIs for the application code to manage and execute the inference sessions.

ONNX Runtime is the inference engine used to execute ONNX models. ONNX Runtime is supported on different Operating System (OS) and hardware (HW) platforms. The Execution Provider (EP) interface in ONNX Runtime enables easy integration with different HW accelerators. There are published packages available for x86_64/amd64 and aarch64 or developers can build from source for any custom configuration. Today’s announcement enables developers to create an optimized package for the ONNX Runtime to be used across the diverse set of edge devices. This package supports the same APIs for the application code to manage and execute the inference sessions.

AI is infused in applications to solve many scenarios, delivering rich user experiences and efficient workflows for different tasks. These applications make use of runtime engines from the host platform (OS) or package the library to execute the ML models. Also, the disk footprint and in-memory size for these applications need to be reasonably small to optimize resource consumption on iOS / Android smartphones, Windows 10 PCs and other edge device categories.

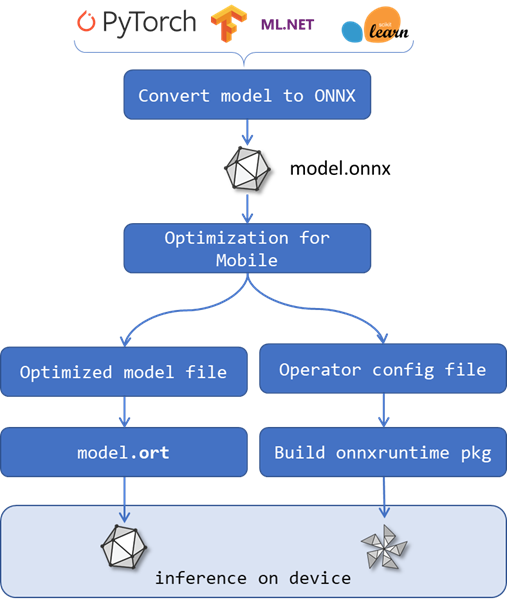

These requirements motivated us to develop the ONNX Runtime mobile feature. Developers can create a smaller runtime package to use in client devices for executing their ONNX models. The size reduction is achieved by building the runtime package as a custom binary for a user-defined set of ONNX models and by using a new optimized file format for the model file. Details for the ONNX Runtime mobile packaging steps are available on GitHub.

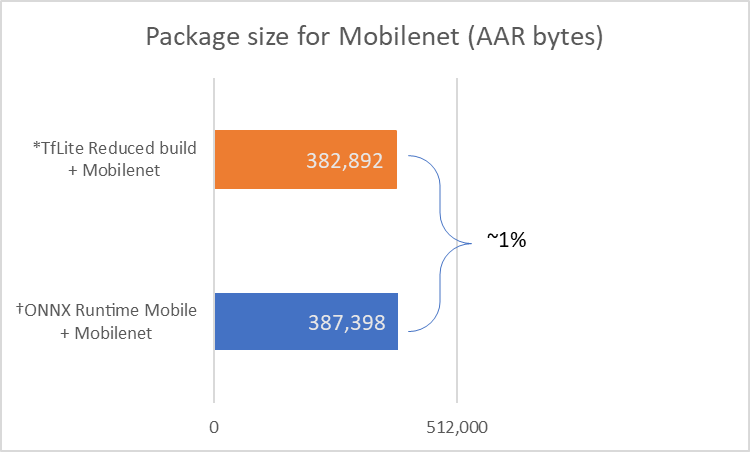

ONNX Runtime mobile can execute all standard ONNX models. The size of the runtime package varies depending on the models you wish to support. As shown in the chart below, the size of the ONNX Runtime mobile package for Mobilenet is the same (~1% difference) as TensorFlowLite’s reduced build package.

*TfLite package size from: Reduce TensorFlow Lite binary size

†ONNX Runtime full build is 7,546,880 bytes

ONNX Runtime v1.5 Updates

The ONNX Runtime v1.5 update is released today. Here are some of the highlights of the updates for this release for Inference and Training feature areas. Full details can be found in the release notes.

Inference features

- Reduced Operator Kernel build

- Transformer model performance optimizations

- Improved quantization support for CNN models (performance, per-channel quantization)

- Execution Providers

- CUDA: updated to CUDA 10.2 / cuDNN 8.0

- TensorRT 7.1 support

- OpenVINO 2020.4 support

- DirectML updates for more complete operator support

- NNAPI major updates for better usability and operator support

- MIGraphX updates for more complete operator support and improved performance

Training features

Today we introduce updates for improved developer experience to use ONNX Runtime training in your distributed training experiments:

- New and improved API to simplify integration with PyTorch trainer code

- Updated CUDA 11 / cuDNN 8.0 support to accelerate in NVIDIA A100

We announced the preview of the training feature in ONNX Runtime during //build 2020 as part of the AI at Scale initiative.